AI and AI Architecture

Thoughts based on decades of experience

I learned the foundations of current AI - deep learning through back-propagation - decades ago.

I have thought about it a lot since then.

Summary: providing end/vertical value is good, regardless of algorithm.

The rest is not worth discussing.

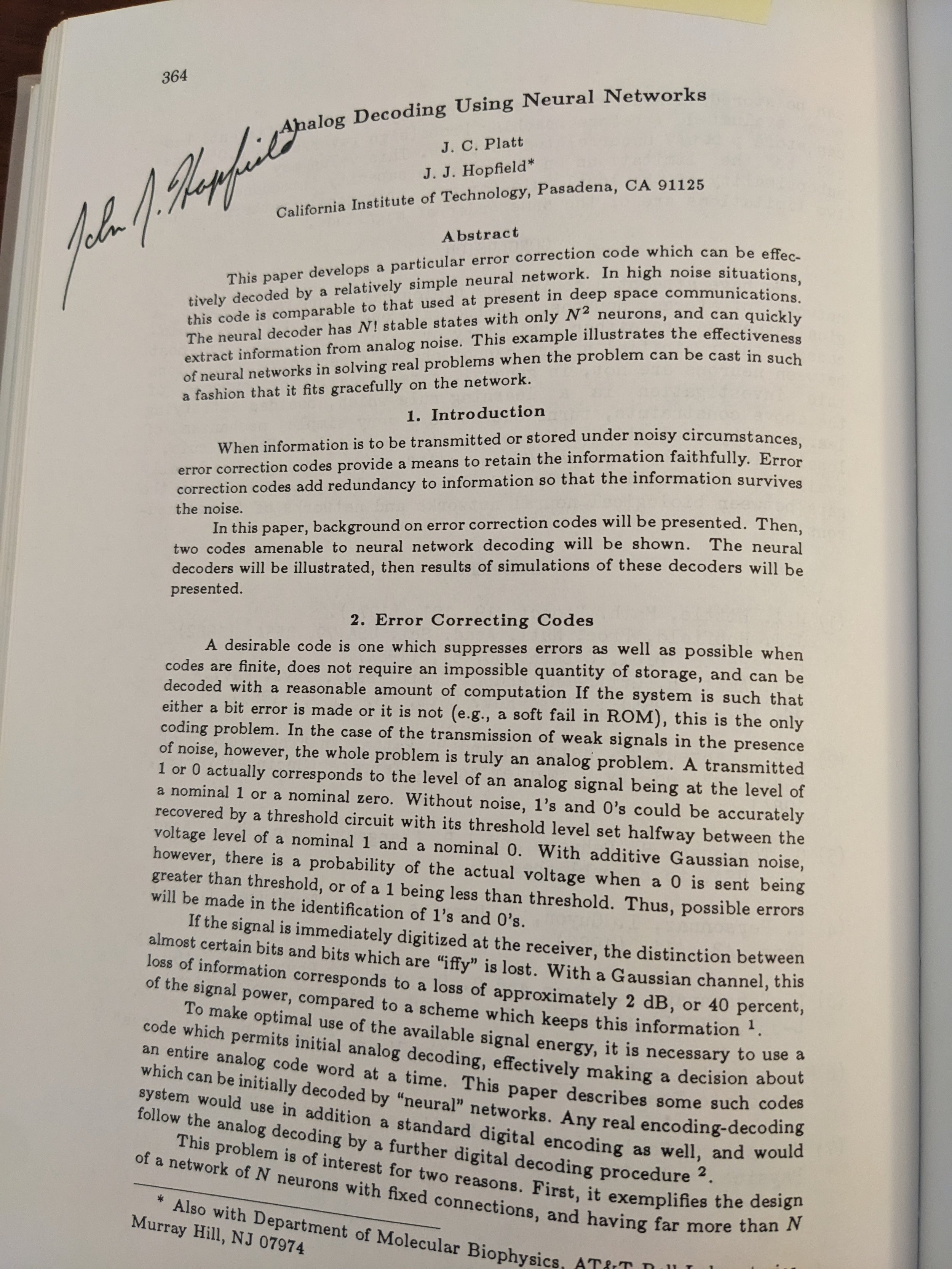

At Caltech, I took a class in a new topic - "neural networks" - taught by the inimitable John Hopfield. I found the topic fascinating, following up with a seminar (which included hearing a talk by Terry Sejnowski*, sitting with Bela Julesz**, and meeting many other now-luminaries***). After watching the infighting at the first IJCNN in San Diego, I decided to rejoin Bell Labs and return to Telecom, but I never stopped being fascinated by this technology, through AI Winters and the emergence of today's LLMs. As an outsider, I get to look at the technology and business of AI from a bit of a different perspective. Here are some selections.

*For those who are unfamiliar, Terry Sejnowski used machine learning (back-propagation) to "teach a computer to read aloud." That's right - he created an AI Text-To-Speech system. In 1986.

**For those who are unfamiliar, Bela Julesz did fundamental research into visual perception, including elegant experiments with the Random Dot Stereogram. That work underlies today's Virtual Reality.

***I specifically remember Christof Koch in biological neural nets and Demetri Psaltis in optical neural nets. Carver Mead was doing analog electronics inspired by the retina's neural networks, work that led to the Foveon camera sensor.

-

Epiplexity

Explore epiplexity, a new concept in Information Theory that has important implications for the AI industry. Click here.

-

Booting Language and Common Ground

Several thoughts converge as we explore what it takes to agree on what words mean. See what I mean here.

-

Thinking backwards

What if - at least initially - Reasoning developed as yet another tool of System 1 and only later became superior? Or did it ever? Read more.

-

Hummingbird Defeats AI

I took a picture of a cloud (or contrail) and asked GPT to alter it a little. The results were… not good. Read more.

-

Four R's Architecture

While working with Textician, I wrote a number of blog posts on general AI architecture. Although these posts were written in 2018 and are somewhat biased toward what Textician was doing, they still hold up remarkably well. They are presented here without updates. The first two establish the framework for the remaining discussion.

It turns out that Reasoning (or System 2) is really thinking slow: just 10 bits/s!

Learning, Predicting and Reasoning (The Four R's Framework) (pdf)

A Bit of Context (on Recognition and Reasoning) (pdf)

An Accurate Assessment (on the difficulty of evaluating models) (pdf)

Bring Down the Noise; Bring in Da Funk (on Representation of data) (pdf)

Pay Attention (on use of Reasoning's limited resources) (pdf)